Containers are reusable components in DataStage.

When logic developed in one job also needs to be applied in other jobs, you can package that logic in a container and use it in those jobs.

Types of Containers in DataStage

There are two types of containers:

- Local container -> job-specific

- Shared container -> project-specific

Local Container

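A local container reduces the visual complexity of a job design and can be used only in the job where it is created.

- Select part of the job -> click Edit -> Construct Container -> Local

- The selected stages and links are packed into a single box called a container.

To reverse this, for example in a job laid out as Container -> Data Set, right-click the container and choose Deconstruct. This gives the original pattern of stages and links back.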
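The construct and deconstruct steps can be pictured with a small Python sketch. This is only a conceptual model, not a DataStage API: the `LocalContainer` class, the `construct_local` and `deconstruct` functions, and the stage names are all hypothetical and chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class LocalContainer:
    """Hypothetical stand-in for a local container: a named box of stages."""
    name: str
    stages: list = field(default_factory=list)


def construct_local(job, start, end, name="Container1"):
    """Replace the stages job[start:end] with one LocalContainer holding them."""
    box = LocalContainer(name, job[start:end])
    return job[:start] + [box] + job[end:]


def deconstruct(job):
    """Expand every LocalContainer back into the stages it was built from."""
    flat = []
    for item in job:
        if isinstance(item, LocalContainer):
            flat.extend(item.stages)
        else:
            flat.append(item)
    return flat


# A job modelled as an ordered list of (hypothetical) stage names.
job = ["Sequential_File", "Transformer", "Sort", "Data_Set"]

packed = construct_local(job, 1, 3)
print([s.name if isinstance(s, LocalContainer) else s for s in packed])
print(deconstruct(packed) == job)
```

In this model, packing hides the two middle stages behind one box, and deconstructing returns exactly the original list, which mirrors how Deconstruct restores the original design.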

Shared Container

- These are reusable components that can be used within the project.

- Shared containers help you to simplify your design but, unlike local containers, they are reusable by other jobs.

- You can use shared containers to make common job components available throughout the project. You can create a shared container from a stage and associated metadata and add the shared container to the palette to make this pre-configured stage available to other jobs.

- You can also insert a server shared container into a parallel job as a way of making server job functionality available. For example, you could use it to give the parallel job access to the functionality of a server transform function. (Note that you can only use server shared containers on SMP systems, not MPP or cluster systems.)

- Shared containers comprise groups of stages and links and are stored in the Repository like DataStage jobs. When you insert a shared container into a job, InfoSphere DataStage places an instance of that container into the design. When you compile the job containing an instance of a shared container, the code for the container is included in the compiled job. You can use the InfoSphere DataStage debugger on instances of shared containers used within server jobs.

- When you add an instance of a shared container to a job, you will need to map metadata for the links into and out of the container, as these can vary in each job in which you use the shared container. If you change the contents of a shared container, you will need to recompile those jobs that use the container in order for the changes to take effect. For parallel shared containers, you can take advantage of runtime column propagation to avoid the need to map the metadata. If you enable runtime column propagation, then, when the job runs, metadata will be automatically propagated across the boundary between the shared container and the stage(s) to which it connects in the job.

- Note that there is nothing inherently parallel about a parallel shared container – although the stages within it have parallel capability. The stages themselves determine how the shared container code will run. Conversely, when you include a server shared container in a parallel job, the server stages have no parallel capability, but the entire container can operate in parallel because the parallel job can execute multiple instances of it.

- You can create a shared container from scratch or place a set of existing stages and links within a shared container.

- You can use containers to make parts of your job design reusable.

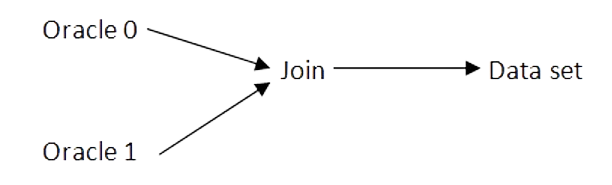

- A container is a group of stages and links. Containers enable you to simplify and modularize your job designs by replacing complex areas of the diagram with a single container stage.
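The point that a shared container's code is included in the job at compile time, so that changes only take effect after the job is recompiled, can be illustrated with a short Python sketch. It is a conceptual model under stated assumptions, not how DataStage is implemented: the `SharedContainer` and `Job` classes, the `compile` method, and the job and stage names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class SharedContainer:
    """Hypothetical shared container: a named group of stages in the project."""
    name: str
    stages: list


@dataclass
class Job:
    """Hypothetical job that uses one shared container instance."""
    name: str
    container: SharedContainer
    compiled: list = field(default_factory=list)

    def compile(self):
        # Copy the container's current stages into the compiled job,
        # modelling "the code for the container is included in the compiled job".
        self.compiled = list(self.container.stages)


cleanse = SharedContainer("Cleanse", ["Trim", "Dedupe"])
job_a = Job("LoadCustomers", cleanse)
job_b = Job("LoadOrders", cleanse)
job_a.compile()
job_b.compile()

# Change the shared container, then recompile only one of the jobs.
cleanse.stages.append("Validate")
job_a.compile()

print(job_a.compiled)  # recompiled: includes the new stage
print(job_b.compiled)  # not recompiled: still the old contents
```

In this model `job_b` keeps running the old container contents until it is recompiled, which is why every job that uses a changed shared container needs a recompile.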